
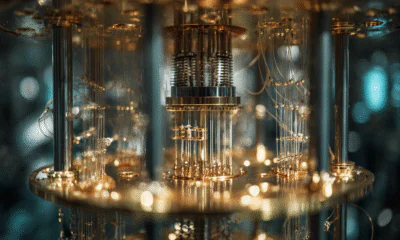

Stratospheric Quantum Data Centers: The Next Cloud

Securities.io maintains rigorous editorial standards and may receive compensation from reviewed links. We are not a registered investment adviser and this is not investment advice. Please view our affiliate disclosure.

What if “cloud computing” became literal? Scientists are exploring the deployment of quantum computers in the stratosphere to address one of quantum computing's core problems: the cost of cooling.

If deployed, this approach could cut cooling costs and change the way we think about “cloud computing.”

TL;DR

- Quantum computers require extreme cooling, and current cryogenic systems make quantum data centers expensive, energy-heavy, and difficult to scale.

- KAUST researchers propose placing quantum processors on high-altitude airships, using the stratosphere’s naturally cold temperatures to cut cooling demand by up to 21 percent.

- These airborne platforms would rely on solar power, free-space optical links, and relay balloons to connect with ground data centers while offering flexible, movable compute capacity.

- Early modeling suggests the approach could support more qubits with lower error rates, pointing toward a future where quantum computing and cloud computing converge literally in the clouds.

The Growing Cost of Cooling Quantum Data Centers

Quantum computers are machines that use quantum mechanics to perform certain complex calculations much faster than classical computers can.

Unlike classical computers, which store and process data in bits (i.e., zeros or ones), quantum computers use qubits that can exist in multiple states at the same time, a phenomenon called superposition, and can also be linked together, a phenomenon called entanglement. These properties allow quantum computers to explore many possibilities simultaneously.

Because qubits are their fundamental data unit, quantum computers can represent far richer states than classical machines: describing n qubits takes 2^n complex amplitudes, which underpins their potential for certain massively parallel computations. Qubits, however, are very sensitive to environmental noise, such as heat, vibration, and electromagnetic interference.
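To make superposition and entanglement concrete, and to show how the description of a quantum register grows as 2^n amplitudes for n qubits, here is a minimal pure-Python state-vector sketch. It is a toy simulator written for illustration only (the helper names are hypothetical, not from any quantum SDK): a Hadamard gate puts one qubit into superposition, and a CNOT then entangles it with a second qubit to form a Bell state, in which measuring either qubit fixes the other.

```python
import math

# Toy state-vector simulator (pure Python; helper names are hypothetical).
# n qubits need 2**n complex amplitudes; qubit 0 is the most significant bit.

def zero_state(n):
    """Return the |00...0> state for n qubits."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state

def apply_hadamard(state, target, n):
    """Hadamard on qubit `target`: maps |0> to an equal superposition of |0> and |1>."""
    h = 1 / math.sqrt(2)
    new = state[:]
    mask = 1 << (n - 1 - target)
    for i in range(len(state)):
        if (i & mask) == 0:          # visit each |..0..>/|..1..> pair once
            j = i | mask
            a, b = state[i], state[j]
            new[i] = h * (a + b)
            new[j] = h * (a - b)
    return new

def apply_cnot(state, control, target, n):
    """CNOT: flip `target` wherever `control` is 1 (entangles the two qubits)."""
    new = state[:]
    cmask = 1 << (n - 1 - control)
    tmask = 1 << (n - 1 - target)
    for i in range(len(state)):
        if (i & cmask) and not (i & tmask):
            j = i | tmask
            new[i], new[j] = state[j], state[i]
    return new

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]

n = 2
bell = apply_cnot(apply_hadamard(zero_state(n), 0, n), 0, 1, n)
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]: only |00> or |11>
print({qubits: 2 ** qubits for qubits in (1, 2, 10, 50)})  # amplitudes per register size
```

The amplitude list doubles with every added qubit, which is why exact classical simulation becomes impractical beyond a few dozen qubits.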

Because they are so fragile, qubits are kept at extremely low temperatures to suppress noise-induced errors and ensure proper operation.

Most quantum systems operate at temperatures between a few millikelvin (mK) and about 10 K.

While quantum data centers (QDCs) have the potential to complete a task twice as fast as a traditional data center, they consume ten times more energy because of their energy-intensive cryogenic cooling systems.

This makes the thermodynamics of QDCs a key area of study for reducing their cooling energy consumption.

The main cooling techniques used for quantum chips in data centers include laser cooling, dilution refrigeration, and pulse-tube refrigeration. More advanced approaches are also gaining momentum, such as exploiting the magnetocaloric effect (in which magnetic materials heat up when a magnetic field is applied and cool down when it is removed) in supersolids.

Another technique involves immersing quantum circuits in the rare cryogenic fluid Helium-3, which becomes a superfluid at extremely low temperatures and exhibits unique quantum properties.

Still, achieving and maintaining cryogenic environments for qubits demands substantial cost and energy, posing a major barrier to adopting and scaling up this rapidly emerging technology.

This calls for innovative engineering approaches that can enable high-performance quantum computing.

A study from KAUST researchers takes up this challenge by proposing to deploy quantum processors on stratospheric High Altitude Platforms (HAPs). The processors would be hosted on airships flying through the stratosphere at an altitude of around 20 kilometers (12.4 miles), where the ambient temperature is about −50 °C (−58 °F).

By leveraging these naturally cold conditions, the researchers aim to reduce the cooling demands of QDCs significantly and enable sustainable, high-performance quantum computing.

Turning Airships Into Solar-Powered Cryogenic Data Centers

The new proposal from researchers at Saudi Arabia’s King Abdullah University of Science and Technology (KAUST), published in the journal npj Wireless Technology [1], details a novel framework for deploying quantum computers in the stratosphere using airships, or blimps.

The paper also argues that this approach to green, flexibly deployable quantum computing in the upper atmosphere is more energy-efficient than traditional ground-based data centers and performs better computationally.

“By operating above the clouds and weather systems, the airship has access to predictable and unimpeded solar irradiance.”

– Lead author Basem Shihada of KAUST

To leverage the cold conditions of the stratosphere, the team proposes Quantum Computing-Enabled High Altitude Platforms (QC-HAPs). These stratospheric airships would host the quantum devices inside cryostats that maintain the required cryogenic temperatures.

Yes, cryostats are still needed to maintain quantum states, but at that altitude, the naturally low ambient temperature reduces the energy needed for cryogenic cooling.
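A textbook Carnot bound shows why a colder ambient helps. The minimum work needed to pump heat Q out of a stage at temperature T_cold and reject it at T_hot is W = Q(T_hot − T_cold)/T_cold, so lowering T_hot from room temperature to the stratosphere's roughly −50 °C shrinks W. The sketch below is an idealized illustration under assumed temperatures (25 °C on the ground, 15 mK as a representative superconducting stage), not the KAUST team's model; real cryocoolers operate far below Carnot efficiency.

```python
# Idealized Carnot bound on refrigeration work; NOT the KAUST paper's model.
# Real cryocoolers achieve only a small fraction of Carnot efficiency.

def carnot_work_per_joule(t_cold_k, t_hot_k):
    """Minimum work (J) to pump 1 J of heat from t_cold_k and reject it at t_hot_k."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k")
    return (t_hot_k - t_cold_k) / t_cold_k

GROUND_K = 298.15        # assumed ~25 °C ground ambient
STRATOSPHERE_K = 223.15  # ~-50 °C ambient at ~20 km altitude

STAGES = {
    "trapped ion (4 K)": 4.0,
    "superconducting (15 mK, assumed midpoint of 10-20 mK)": 0.015,
}

for label, t_cold in STAGES.items():
    ground = carnot_work_per_joule(t_cold, GROUND_K)
    strat = carnot_work_per_joule(t_cold, STRATOSPHERE_K)
    print(f"{label}: ideal work saving {1 - strat / ground:.1%}")
```

This ideal calculation gives roughly a 25% saving for both architectures, with the warmer 4 K stage gaining slightly more; the study's own modeling reports a reduction of up to about 21%.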


| Parameter | Ground Quantum Data Center | Stratospheric QC-HAP Airship |
|---|---|---|
| Ambient temperature | ~20–25 °C at ground level; requires deep cryogenic stacks | ≈ −50 °C at ~20 km altitude, easing cryogenic load |
| Cooling energy demand | High, dominated by dilution refrigerators and pulse-tube coolers | Modeling suggests up to ~21% lower cooling demand than ground QDCs |
| Primary power source | Grid electricity, often from mixed fossil and renewable sources | High-irradiance solar plus lithium–sulfur batteries for nighttime |
| Qubit capacity & errors | Limited by cooling power and noise; higher error rates at scale | Models indicate ~30% more qubits with lower error rates in some architectures |
| Connectivity | Fiber and classical networks; quantum links still experimental | Free-space optical links with RF backup and balloon relays for long-range access |
| Deployment flexibility | Fixed locations, multi-year build cycles and capex | Movable fleet that can shift capacity toward demand hotspots or remote regions |

In addition, the airships would carry solar panels to convert sunlight into electricity and lithium–sulfur batteries to keep them running through the night and during disruptive weather.
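The day/night split is what drives battery sizing. As a rough sketch, assuming a constant load, a 12-hour night, and hypothetical values for array output, usable battery fraction, and round-trip efficiency (none of these numbers come from the study), the energy balance for one airship looks like this:

```python
# Back-of-envelope 24-hour energy balance for one hypothetical QC-HAP.
# Every default value here is an illustrative assumption, not a study figure.

def battery_needed_kwh(load_kw, night_hours, depth_of_discharge=0.8):
    """Capacity (kWh) to carry a constant load through the night without
    discharging below the assumed usable fraction of the battery."""
    return load_kw * night_hours / depth_of_discharge

def daily_surplus_kwh(array_kw, full_sun_hours, load_kw,
                      night_hours=12.0, round_trip_eff=0.9):
    """Solar energy left over after a day of operation.

    Daytime load is served directly; night-time load passes through the
    battery, so charging for it costs load / round_trip_eff.
    """
    generated = array_kw * full_sun_hours
    day_use = load_kw * (24.0 - night_hours)
    night_charge = load_kw * night_hours / round_trip_eff
    return generated - day_use - night_charge

LOAD_KW = 50.0    # hypothetical cryostat + control electronics + links
ARRAY_KW = 200.0  # hypothetical solar array output in full sun
SUN_HOURS = 8.0   # hypothetical equivalent full-sun hours per day

print(f"battery: {battery_needed_kwh(LOAD_KW, 12.0):.0f} kWh")
print(f"surplus: {daily_surplus_kwh(ARRAY_KW, SUN_HOURS, LOAD_KW):.1f} kWh")
```

A negative surplus would signal an undersized array, and seasonal changes in sun hours shift this balance directly.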

According to the paper, cosmic rays (high-energy particles that reach Earth from the Sun and from beyond the solar system) would have a negligible impact on the reliability of stratospheric quantum computing systems, supporting the platform’s viability.

The QC-HAPs positioned in the sky will be linked to quantum data centers on the ground.

To do this, the HAPs would send information encoded in light via free-space optical (FSO) communication. In cloudy conditions, radio-frequency (RF) links would serve as a backup.

To prevent signal degradation and decoherence as data travels through the atmosphere, the team suggests using intermediate, balloon-borne platforms at lower altitudes as relay stations.
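One way to see why shorter hops can help is the Beer-Lambert law, T = exp(−αL), which gives the fraction of optical power surviving a path of length L through a medium with attenuation coefficient α. The sketch below uses a hypothetical α and path length and ignores beam spreading and turbulence. It also shows a caveat: if relays merely pass light along, the per-hop losses multiply back to the single-hop value, so the benefit depends on each relay regenerating or re-sending the signal.

```python
import math

# Beer-Lambert attenuation along an optical path: T = exp(-alpha * L).
# ALPHA_PER_KM is hypothetical; beam spreading and turbulence are ignored.
ALPHA_PER_KM = 0.05

def transmittance(distance_km, alpha_per_km=ALPHA_PER_KM):
    """Fraction of optical power that survives a path of the given length."""
    return math.exp(-alpha_per_km * distance_km)

def per_hop(total_km, relays):
    """Transmittance of each equal hop when `relays` stations split the path."""
    return transmittance(total_km / (relays + 1))

def passive_chain(total_km, relays):
    """End-to-end transmittance if relays only forward light without regenerating it."""
    return per_hop(total_km, relays) ** (relays + 1)

TOTAL_KM = 100.0  # hypothetical slant-path length
for relays in (0, 1, 3):
    print(relays, f"per hop {per_hop(TOTAL_KM, relays):.3f}",
          f"passive end-to-end {passive_chain(TOTAL_KM, relays):.4f}")
```

For quantum signals, plain amplification is ruled out by the no-cloning theorem, so relays that restore signal quality would need quantum-repeater techniques.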

The great thing about QC-HAPs is that they can be moved wherever they are needed, whether in demand hotspots or remote regions. This flexible deployment extends quantum computing coverage, alleviates computational bottlenecks, and reduces latency.

Also, they can be linked together to increase overall computing power, forming “a dynamic fleet capable of delivering on-demand, scalable quantum computation services worldwide,” said the study’s co-author, Wiem Abderrahim, who is currently a research fellow at the University of Carthage in Tunisia.

This scalable multi-HAP constellation architecture can overcome the energy limits of individual airships and pool their computational capacity.

According to the researchers’ calculations, their solar-powered solution could reduce cooling demand by 21% compared with equivalent quantum computing centers on the ground.

The researchers applied the approach to two leading forms of quantum computing, chosen for their maturity, stability, scalability, and coherence time. The reduction in cooling demand varies with qubit architecture because each type operates in a different cryogenic temperature range.

One uses qubits based on trapped ions cooled to about 4 K (about −269 °C); this architecture benefited most from the QC-HAP concept. The other uses superconducting circuits that operate at temperatures between 10 and 20 mK.

Their analysis also shows that these quantum-enabled HAPs support 30% more qubits than ground-based QDCs while maintaining lower error rates, especially when leveraging advanced hardware capabilities.

Besides the qubit type, the energy savings achieved by the stratospheric quantum system also depend on the data center’s architecture, the study noted.

While promising, this futuristic concept is a long way from practical implementation. It would require significant advances in quantum computing hardware, such as robust systems to detect and correct errors, particularly during transmission.

The stratospheric environment also has unique characteristics that require careful consideration, such as seasonal variations in solar irradiance and weather conditions, which affect how much solar power can be harvested and, in turn, the energy efficiency of the proposed platform.

The researchers say future work should focus on analyzing how environmental factors affect quantum systems and on developing robust designs for a real-world QC-HAP rollout.

“Our next steps are to move from the conceptual and analytic stage toward more implementation-focused studies.”

– The study’s co-author, Osama Amin

Looking ahead, the researchers expect aerial quantum platforms to complement, rather than replace, conventional ground-based data centers in a hybrid cloud computing framework.

The Global Race to Make Quantum Computers a Reality

As researchers explore sky-based quantum platforms, major industry players continue advancing the hardware needed for the quantum era that these platforms may eventually support.

IBM, for example, is deeply invested in quantum computing and aims to deliver Starling, a large-scale, fault-tolerant quantum computer, before the end of the decade.

Recently, the company announced new quantum processing units (QPUs) that it expects will help it achieve quantum advantage and, eventually, a fully fault-tolerant quantum computer.

With 120 qubits, IBM Quantum Nighthawk is the first of the new processors and can run quantum circuits about 30% more complex than those possible on IBM’s previous QPU, Heron R2. Tunable couplers let each qubit connect to its four nearest neighbors. This design will let scientists explore problems that require up to 5,000 two-qubit gates, and IBM hopes future versions of Nighthawk will handle up to 10,000 gates by the end of 2027.
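Connecting every qubit to its four nearest neighbors describes a square lattice. The sketch below counts the couplings in such a lattice; the 10 × 12 grid is an assumption chosen only because it holds 120 qubits, not IBM's published chip layout.

```python
# Count nearest-neighbor couplings in a rows x cols square lattice, where each
# interior qubit links to the qubits above, below, left and right of it.
# The 10 x 12 shape is an assumption (10 * 12 = 120), not IBM's actual layout.

def square_lattice_edges(rows, cols):
    """Return the list of (qubit, qubit) couplings in a square lattice."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:
                edges.append((q, q + 1))     # horizontal neighbor
            if r + 1 < rows:
                edges.append((q, q + cols))  # vertical neighbor
    return edges

def degree(edges, qubit):
    """Number of couplings attached to one qubit."""
    return sum(qubit in e for e in edges)

edges = square_lattice_edges(10, 12)
print(len(edges))         # 218 couplings for the assumed 10 x 12 grid
print(degree(edges, 13))  # 4: qubit 13 sits in the interior
print(degree(edges, 0))   # 2: corner qubits have only two neighbors
```

Only interior qubits reach the full four neighbors; edge and corner qubits have three and two.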

IBM Loon, the other new processor, is an experimental 112-qubit chip that includes all the hardware elements required for full fault tolerance, which is meant to address the high failure rate of qubits. It will help the team prepare for Kookaburra, another proof-of-concept processor expected next year, which will be the first modular QPU designed to store and process encoded information.

Additionally, IBM said that moving quantum processor fabrication to 300 mm (12-inch) wafers halves the time needed to build each processor while allowing a tenfold increase in the physical complexity of its chips.

While hardware accelerates, timelines for mainstream quantum vary dramatically across industry leaders.

According to Intel’s former CEO, Pat Gelsinger, quantum computers will go mainstream much more quickly, in about two years, and will mark the end of GPUs. Nvidia, a dominant player in the GPU market, has instead said that it will take two decades for quantum to go mainstream.

“We’re heading into the most thrilling decade or two for technologists,” Gelsinger said in an interview with the FT. He also described classical, AI, and quantum computing together as the “holy trinity” of the computing world.

But while Gelsinger also believes that a “quantum breakthrough” will burst the AI bubble, Google’s Sundar Pichai sees quantum as the next boom, following in AI’s footsteps.

Pichai, CEO of Google parent Alphabet, the world’s third-largest company with a market capitalization of $3.86 trillion, said in a recent interview that quantum computing is rapidly approaching a breakthrough moment similar to the one AI experienced a few years ago.

“I would say quantum is there, where maybe AI was five years ago. So I think in five years from now we’ll be going through a very exciting phase in quantum.”

– Pichai

And Google is positioning itself aggressively for this shift. According to Pichai:

“We have the state-of-the-art quantum computing efforts in the world…building quantum systems, I think, will help us better simulate and understand nature and unlock many benefits for society.”

Reinforcing this trajectory, just last month, researchers at Google Quantum AI reported implementing the surface code [2], a well-known quantum error correction (QEC) scheme, using three distinct dynamic circuits. The result opens new possibilities for applying QEC in practice and could help in developing more reliable quantum computers.

QEC is how these computers can be made to work reliably, and it is essential for building fault-tolerant quantum computers. But “implementing QEC is a significant challenge because the error-detecting and correcting circuits are complex and demand extremely precise operations,” said co-author Matt McEwen.

The surface code in question works by organizing qubits on a 2D grid and then repeatedly checking for faults.
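The “grid plus repeated checks” idea can be illustrated with a deliberately simplified classical analogue. The real surface code measures multi-qubit X- and Z-type stabilizers through ancilla qubits and never reads the data qubits directly; this toy uses plain bits and pairwise parities, and all function names are hypothetical. A fault is spotted by comparing one round of checks with the next, and a single flipped bit is located where the flagged checks meet.

```python
# Classical toy analogue of repeated parity checks on a 2D grid. The real
# surface code measures X- and Z-type stabilizers via ancilla qubits; this
# sketch only illustrates "grid + repeated checks" with plain bits.

def syndrome(grid):
    """Parity (XOR) of every horizontally or vertically adjacent pair of bits."""
    rows, cols = len(grid), len(grid[0])
    checks = {}
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                checks[((r, c), (r, c + 1))] = grid[r][c] ^ grid[r][c + 1]
            if r + 1 < rows:
                checks[((r, c), (r + 1, c))] = grid[r][c] ^ grid[r + 1][c]
    return checks

def detection_events(prev, curr):
    """Checks whose value changed between two rounds flag a new fault."""
    return sorted(k for k in curr if curr[k] != prev[k])

def locate_single_fault(events):
    """Return the bit shared by all flagged checks (valid for one isolated flip)."""
    if not events:
        return None
    common = set(events[0])
    for pair in events[1:]:
        common &= set(pair)
    return common.pop() if len(common) == 1 else None

grid = [[0] * 3 for _ in range(3)]  # valid codeword: all bits equal
round_1 = syndrome(grid)
grid[1][1] ^= 1                     # a fault flips the center bit
round_2 = syndrome(grid)
events = detection_events(round_1, round_2)
print(len(events), locate_single_fault(events))  # 4 (1, 1)
```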

Previously, McEwen worked on a theory proposal showing that there are multiple ways to implement it, in particular demonstrating the feasibility of three distinct dynamic surface code implementations: hex, iSWAP, and walking circuits.

Building on that, the team set out to show experimentally that these circuits work under real-world conditions.

In testing, the circuits suppressed errors by factors of 1.56 (iSWAP), 1.69 (walking), and 2.15 (hex).
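Error-suppression factors are commonly reported as Λ, the factor by which the logical error rate per round falls each time the code distance d grows by two, so that ε_d ≈ ε_3 / Λ^((d−3)/2). Treating the three reported factors as Λ values (an assumption) and picking a hypothetical starting error at distance 3, the sketch below projects how quickly each circuit's errors would shrink at larger distances:

```python
# Project logical error per QEC round assuming eps_d = eps_3 / LAMBDA**((d - 3) / 2).
# Treating the reported factors as LAMBDA and the value of EPS_3 are assumptions.

EPS_3 = 0.01  # hypothetical logical error per round at code distance 3

REPORTED_FACTORS = {"iSWAP": 1.56, "walking": 1.69, "hex": 2.15}

def logical_error(eps_3, lam, d):
    """Logical error per round at odd code distance d >= 3."""
    if d < 3 or d % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return eps_3 / lam ** ((d - 3) // 2)

for name, lam in REPORTED_FACTORS.items():
    row = ", ".join(f"d={d}: {logical_error(EPS_3, lam, d):.1e}" for d in (3, 5, 7, 9))
    print(f"{name:8s} {row}")
```

A larger Λ compounds quickly: over three distance steps (d = 3 to 9), Λ = 2.15 cuts the error roughly tenfold, while Λ = 1.56 yields less than a fourfold drop.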

“The biggest takeaway from our work is confirming that these dynamical circuit implementations work in reality.”

– McEwen

Breakthroughs in qubit stability are also accelerating. In research partially funded by Google Quantum AI, Princeton engineers recently extended qubit lifetimes.

In a big step toward useful quantum computers, the engineers created a superconducting qubit that remained stable for more than 1 millisecond, three times longer than the best existing versions.
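A simple way to see why a threefold longer lifetime matters is exponential decay: the probability that a qubit still holds its state after time t is roughly exp(−t/T), where T is its coherence time. The 50 ns gate duration and 1,000-gate circuit below are hypothetical, and the one-third-of-a-millisecond baseline is inferred from the “three times longer” comparison rather than taken from the paper.

```python
import math

# Exponential-decay picture: P(state survives time t) ~ exp(-t / T_coherence).
# Gate time, circuit depth and the 1/3 ms baseline are hypothetical.

GATE_NS = 50.0  # hypothetical duration of one gate layer, in nanoseconds
N_GATES = 1000  # hypothetical circuit depth

def survival_probability(n_gates, coherence_ms, gate_ns=GATE_NS):
    """Probability the qubit keeps its state over n_gates sequential gates."""
    elapsed_ms = n_gates * gate_ns * 1e-6  # ns -> ms
    return math.exp(-elapsed_ms / coherence_ms)

for label, t_coh_ms in (("baseline (~1/3 ms)", 1.0 / 3.0), ("Princeton (~1 ms)", 1.0)):
    print(f"{label}: {survival_probability(N_GATES, t_coh_ms):.3f}")
```

Under these assumptions, the 1 ms qubit keeps its state in about 95% of runs versus roughly 85% for the baseline, and the gap widens as circuits get deeper.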

“The real challenge, the thing that stops us from having useful quantum computers today, is that you build a qubit and the information just doesn’t last very long,” said co-author Andrew Houck, Princeton’s dean of engineering. “This is the next big jump forward.”

To confirm the coherence improvement, the researchers built a working quantum chip around the new qubit, using an architecture similar to the systems developed by Google and IBM.

The qubit is a transmon, a design that relies on superconducting circuits operating at extremely cold temperatures and offers solid protection from environmental noise. Transmons also work well with today’s manufacturing processes, but increasing their coherence time is extremely difficult.

So the Princeton team redesigned the qubit, using tantalum, an exceptionally robust metal, to reduce energy loss, and widely available high-quality silicon as the substrate. This tantalum-on-silicon chip is not only easier to mass-produce but also outperforms current designs.

Combining these two materials with refined manufacturing techniques produced one of the most significant improvements in the transmon’s history. According to Houck, swapping the industry’s current best design for Princeton’s in a hypothetical 1,000-qubit computer would make it work roughly a billion times better, because the improvements scale exponentially with system size.
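Houck's “billion times” figure reflects compounding. In a simplified model (an assumption, not the Princeton team's calculation) where each qubit multiplies overall performance by the same factor r, an N-qubit system improves by r^N. Solving for r shows how modest the per-qubit gain can be:

```python
import math

# Simplified compounding model (an assumption, not Princeton's calculation):
# if each qubit multiplies overall performance by r, N qubits give r**N.

def system_gain(per_qubit_factor, n_qubits):
    """Overall improvement when every qubit contributes the same factor."""
    return per_qubit_factor ** n_qubits

def per_qubit_factor_for(target_gain, n_qubits):
    """Per-qubit factor r that satisfies r**n_qubits == target_gain."""
    return math.exp(math.log(target_gain) / n_qubits)

r = per_qubit_factor_for(1e9, 1000)
print(f"per-qubit factor: {r:.4f}")
print(f"check, r**1000: {system_gain(r, 1000):.3g}")
```

For N = 1,000, a per-qubit factor of only about 1.021, roughly a 2% gain per qubit, compounds to a factor of a billion.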

Théau Peronnin, the CEO of Alice & Bob, a company developing a fault-tolerant quantum computing system with Nvidia, recently said that although quantum technology is not yet advanced enough to threaten current cryptographic systems, it could become powerful enough to crack them a few years after 2030.

That would threaten not only Bitcoin and other cryptocurrencies but also the encryption used throughout banking. He told Fortune in an interview:

“The promise of quantum computing is an exponential speed-up, but if you zoom out on an exponential [curve], it’s dead flat—and then it’s a vertical wall. So we’re just at the beginning of the inflection. Now, it’s not any more powerful than your smartphone at the moment. But give it a couple of years, and it will be more powerful than the largest supercomputer ever.”

Companies, however, are working on solutions, while researchers are expanding the reach of quantum networks. Last month, researchers from the University of Chicago Pritzker School of Molecular Engineering (UChicago PME) reported advances that could extend the range of quantum connections [3] from just a few kilometers to as much as 2,000 km.

“For the first time, the technology for building a global-scale quantum internet is within reach.”

– Assistant Professor Tian Zhong

In their study, the team increased the coherence time of individual erbium atoms from 0.1 milliseconds to over 10 milliseconds, and in one instance, they even hit 24 milliseconds.
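Longer coherence matters for distance because a stored qubit must stay coherent while signals cross the link. As a back-of-envelope sketch, assuming photons travel through silica fiber with a typical refractive index of about 1.468 and counting only one-way travel time, the fiber distance covered within a given coherence time is:

```python
# Back-of-envelope: how far light travels in fiber during a qubit's coherence time.
# The refractive index and the one-way-only assumption are illustrative.

C_KM_PER_MS = 299_792.458 / 1000.0  # speed of light in vacuum, km per millisecond
FIBER_INDEX = 1.468                 # assumed typical value for silica fiber

def one_way_delay_ms(distance_km, n=FIBER_INDEX):
    """Time for light to cross the fiber link once."""
    return distance_km * n / C_KM_PER_MS

def max_one_way_km(coherence_ms, n=FIBER_INDEX):
    """Fiber distance light covers before the stored qubit decoheres."""
    return coherence_ms * C_KM_PER_MS / n

for coherence_ms in (0.1, 10.0, 24.0):
    print(f"{coherence_ms:>5} ms -> {max_one_way_km(coherence_ms):,.0f} km")
print(f"2,000 km one-way delay: {one_way_delay_ms(2000.0):.1f} ms")
```

Under these assumptions, 10 ms of coherence corresponds to roughly 2,000 km of fiber, in line with the range cited above, while 0.1 ms covers only about 20 km. Protocols that need a round-trip confirmation would halve these figures.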

The key innovation was a new way of building the crystals needed to create quantum entanglement. For this, the team used molecular-beam epitaxy (MBE), a technique akin to 3D printing. “We start with nothing and then assemble this device atom by atom,” Zhong said. “The quality or purity of this material is so high that the quantum coherence properties of these atoms become superb.”

Investing in Quantum Tech

IonQ, Inc. (IONQ) is a pure-play quantum company that builds and commercializes quantum computers based on trapped-ion qubits. The company offers its quantum hardware through major cloud platforms, making quantum computing more accessible and positioning it for commercial uptake as quantum moves toward real-world use.

IonQ’s stock performance reflects this, with its shares currently trading at $48.10, down 21% in the past month but up over 18% YTD and 67.56% in the past three years. It has an EPS (TTM) of -5.35 and a P/E (TTM) of -9.21.


As for the company’s financial strength, it reported revenue of $39.9 million for Q3 2025, up 222% YoY. Its net loss was $1.1 billion, with GAAP EPS of ($3.58) and adjusted EPS of ($0.17).

IonQ had $1.5 billion in cash, cash equivalents, and investments at the end of the quarter.

“We delivered our 2025 technical milestone of #AQ 64 three months early, unlocking 36 quadrillion times more computational space than leading commercial superconducting systems. We achieved a truly historic milestone by demonstrating world-record 99.99% two-qubit gate performance, underscoring our path to 2 million qubits and 80,000 logical qubits in 2030.”

– CEO Niccolo de Masi

During this quarter, IonQ also completed the acquisition of Oxford Ionics and Vector Atomic and was awarded a new contract with Oak Ridge National Laboratory to develop accelerated quantum-classical workflows and advanced energy applications.



Investor Takeaways

- Quantum computing has reached a turning point. The real barriers now aren’t about whether the physics works; they’re more about whether we can actually build these machines at scale. Any breakthroughs that make qubits easier to cool or more stable bring us closer to a system that people will actually use and pay for. In fact, even wild ideas like launching quantum computers into the stratosphere start to make sense if they solve real engineering problems.

- For investors who want exposure without picking just one company, the smart move would be to focus on those building the foundation. IBM has been in this space long enough to have real know-how on the hardware side of operations. IonQ, on the other hand, is moving fast with trapped-ion technology. Though Nvidia isn’t building qubits for now, quantum computers need serious control systems and computing power around them, and this is exactly what Nvidia does best.

- In case you’re tracking where this is headed, watch out for a few signs: qubits that stay stable longer, early proof that error correction can scale, successful tests of entanglement over distance, and the rise of hybrid setups that blend quantum processors with traditional computing infrastructure.

Conclusion: When ‘the Cloud’ Becomes Quantum

Quantum computing is rapidly evolving from a laboratory curiosity into a global technology race, with industry giants like IBM, Google, and Nvidia pushing hardware capabilities to unprecedented levels. Meanwhile, breakthroughs in qubit coherence, quantum error correction, and long-distance entanglement are steadily addressing the field’s long-standing challenges.

Amid this, KAUST’s proposal could make “cloud computing” literal, using the stratosphere’s naturally cold temperatures and abundant, unobstructed sunlight.

These advances suggest that we are approaching a historic inflection point. Within the next decade, quantum computing could well move from theory to practice, reshaping encryption, science, and perhaps even the meaning of “the cloud” itself.


References

1. Abderrahim, W., Amin, O., & Shihada, B. (2025). Green quantum computing in the sky. npj Wireless Technology, 1, Article 5. https://doi.org/10.1038/s44459-025-00005-y

2. Eickbusch, A., McEwen, M., Sivak, V., et al. (2025). Demonstration of dynamic surface codes. Nature Physics. Published 17 October 2025. https://doi.org/10.1038/s41567-025-03070-w

3. Gupta, S., Huang, Y., Liu, S., Pei, Y., Gao, Q., Yang, S., Tomm, N., Warburton, R. J., & Zhong, T. (2025). Dual epitaxial telecom spin-photon interfaces with long-lived coherence. Nature Communications, 16, 9814. https://doi.org/10.1038/s41467-025-64780-6