
Physical AI: Investing in the 2026 Humanoid Robot Boom

Securities.io maintains rigorous editorial standards and may receive compensation from reviewed links. We are not a registered investment adviser and this is not investment advice. Please view our affiliate disclosure.

CES 2026 Signals the Shift From Virtual to Physical AI

CES, formerly the Consumer Electronics Show, is the world’s largest and most influential technology exhibition. We previously reported that one of the most important reveals of the show was the first-ever commercialized solid-state battery, produced by Donut Labs.

Another major announcement came from AI-hardware leader NVIDIA, which presented “physical AI” as robotics’ ChatGPT moment. This is not entirely a surprise: the company has been promoting physical AI for some time, on the view that purely “intellectual” large language models (LLMs) are only the first step in deploying AI.

In practice, AI will have its greatest impact when it interacts directly with the physical world, instead of staying confined to digital and virtual environments.

Until now, this is something AI systems have struggled to do. The real world is a lot messier than a neat set of data in a spreadsheet, a video, or a search engine. It is often ambiguous, changing, and unexpected.

For these reasons, a new type of AI is needed, one that learns from physical reality and repurposes neural networks in new ways. This is what NVIDIA’s Cosmos platform promises.

Why 2026 Could Be the ChatGPT Moment for Robotics

The comparison between what physical AI will do for robotics in 2026 and what ChatGPT did for LLMs comes directly from Jensen Huang, founder and CEO of NVIDIA, who made it in his CES 2026 keynote.

Huang made the announcement as NVIDIA released new open models for robot learning and reasoning, NVIDIA Cosmos and GR00T (Generalist Robot 00 Technology), along with Isaac Lab-Arena for robot evaluation and the OSMO edge-to-cloud compute framework to simplify robot training workflows. Each of these tools is covered in more detail below.

Why It Matters

So far, LLMs, being first and foremost language models, have mostly affected reasoning- and language-heavy activities such as writing, programming, search, data analysis, translation, and customer service.

These are important activities, but only a fraction of the world’s economy.

Many more activities, often the most labor-intensive ones, require interaction with the physical world: manufacturing, healthcare, transportation and logistics, farming, mining, domestic tasks, and so on.

Which Industries Will Be Transformed First by Physical AI

In theory, every sector of the physical economy will be affected by the spread of robotics. In practice, some sectors will adopt robotics much faster and feel the impact sooner.

Self-Driving Cars

Autonomous vehicles made great progress in 2025 and are likely nearing wider deployment, pending regulatory authorization and a clearer legal framework.

Driving is heavy on reasoning, while a car’s mechanical actions are relatively simple (movement on a 2D surface, acceleration, braking, signaling). As a result, what matters most is a combination of the following elements:

- Strong on-board hardware for edge computing (not dependent on a connection to networks).

- Data and training that match real-world conditions, from general driving rules to rare edge cases and sudden changes, such as associating a rolling ball with the risk of a child running onto the road and decelerating preemptively.

- Reasoning vision-language-action (VLA) models that convert visual cues into the right actions.

Logistics

Physical AI will impact this field in at least two different ways.

The first is warehouses and supply management. Physical AI enables Autonomous Mobile Robots (AMRs) to navigate complex environments and avoid obstacles, including humans, using direct feedback from onboard sensors. Robotic arms and other manipulators then handle the goods themselves.

The second is delivery services, which are closer to autonomous driving, with physical AI handling everything from driving to the right address to safely placing goods at the right door while navigating fences, uneven terrain, and other obstacles.

Manufacturing

As in warehouses, physical AI in factories needs to deal with a complex environment mixing machines, humans, and now robots.

In addition, many manufacturing sites have high-power tools, dangerous materials (hot metal, lasers, chemicals, etc.), and much more demanding requirements for final quality and efficiency.

While a stuck or faulty warehouse robot can likely be dealt with by a human working nearby, the same error in an assembly line or a complex chemical plant can turn dangerous quickly.

Surgery & Healthcare

For now, most surgical robots, like those from Intuitive Surgical (ISRG), are closer to robotic arms controlled by a surgeon than to truly autonomous robots. This is changing quickly as AI capabilities grow:

- XRlabs is using NVIDIA Jetson Thor and Isaac for Healthcare to let surgical scopes, starting with exoscopes, guide surgeons with real-time AI analysis.

- LEM Surgical is using NVIDIA Isaac for Healthcare and Cosmos Transfer to train the autonomous arms of its Dynamis surgical robot, powered by NVIDIA Jetson AGX Thor™ and Holoscan.

Manual Repetitive Tasks: Humanoid Robots

Most work environments, rooms, and tools are designed to be handled by human hands and bodies. It therefore makes sense that the ideal form for a robot replacing humans in tedious or dangerous tasks would also be humanoid.

However, the human body is also a very complex machine, and it is only recently that robots have become mechanically sophisticated enough to replicate human motion properly.

Humanoids may therefore take longer to mature, particularly in gross and fine motor skills and in the ability to perceive, understand, reason about, and interact with the physical world regardless of the task.

NVIDIA’s Physical AI Stack Explained

Following the playbook of CUDA, the computing platform that let GPUs be used for applications beyond graphics rendering and gave birth to much of the current AI boom, NVIDIA is counting on open models to drive the coming boom in physical AI.

If this works, NVIDIA could become as much a physical AI stock as it has been an LLM-driven stock over the past five years.

NVIDIA’s core hardware and neural network expertise is now being packaged into interlocking components, each tuned for physical AI applications.

NVIDIA Cosmos

Cosmos is “a platform with open world foundation models (WFMs), guardrails, and data processing libraries to accelerate the development of physical AI for autonomous vehicles (AVs), robots, and video analytics AI agents.”

Several robotics and autonomous vehicle companies are already using Cosmos to accelerate the development of their physical AI.

Cosmos is actually a family of pre-trained models that let robots anticipate how the physical world will react and change, turn synthetic data (simulations) into realistic video, and reason step by step based on real-world physical data and observation.

NVIDIA Isaac GR00T & Isaac Lab-Arena

Isaac GR00T N1.6 is a vision-language-action (VLA) model built specifically for humanoid robots, offering full-body control and contextual understanding. Robotics companies including Franka Robotics, Neura Robotics, and Humanoid are already using it.

It is complemented by Isaac Lab-Arena, a collaborative system for evaluating and benchmarking robot policies in simulation. Research labs and robotics companies can use it to evaluate their models quickly and compare them with others in a standardized environment.
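The value of a standardized benchmark is that every policy faces the identical set of test conditions. The toy Python sketch below illustrates that idea, assuming hypothetical policies modeled as functions that succeed or fail on a randomly disturbed task. It does not use Isaac Lab-Arena’s actual API; the task names, disturbance model, and `run_benchmark` helper are illustrative assumptions.

```python
import random
from typing import Callable

# Hypothetical policy signature: given a task name and a disturbance level in [0, 1),
# return True if the robot completes the task.
Policy = Callable[[str, float], bool]

# 100 hypothetical trials drawn from four task types.
TASKS = ["pick_cube", "open_drawer", "stack_blocks", "place_in_bin"] * 25


def run_benchmark(policy: Policy, tasks: list[str], seed: int = 0) -> float:
    """Return the policy's success rate on a fixed, seeded sequence of trials."""
    rng = random.Random(seed)  # same seed -> same disturbances for every policy
    successes = sum(policy(task, rng.random()) for task in tasks)
    return successes / len(tasks)


# Two hypothetical policies: the robust one tolerates larger disturbances.
def robust_policy(task: str, disturbance: float) -> bool:
    return disturbance < 0.8


def fragile_policy(task: str, disturbance: float) -> bool:
    return disturbance < 0.5


if __name__ == "__main__":
    leaderboard = {
        name: run_benchmark(policy, TASKS)
        for name, policy in [("robust", robust_policy), ("fragile", fragile_policy)]
    }
    # Print policies from best to worst success rate.
    for name, rate in sorted(leaderboard.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name}: {rate:.0%}")
```

Because both policies see the same seeded disturbances, the gap between their scores reflects the policies themselves rather than luck, which is the point of a shared evaluation environment.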

Importantly, Isaac GR00T’s computing requirements are relatively modest: a single Jetson AGX Thor, NVIDIA’s $3,500 robotics chip module, is sufficient to run it, keeping the compute hardware cost of a humanoid robot relatively small.

According to NVIDIA, its Jetson Thor series modules are the ultimate platform for physical AI and robotics, delivering up to 2070 FP4 TFLOPS of AI compute and 128 GB of memory, with power configurable between 40 W and 130 W.

NVIDIA says they deliver over 7.5x the AI compute of Jetson AGX Orin™, with 3.5x better energy efficiency.
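As a back-of-the-envelope check on these figures, the Python snippet below simply divides the quoted numbers. It assumes the 2070 FP4 TFLOPS peak is reached at the 130 W maximum power setting and uses the $3,500 price quoted above; real workloads rarely hit peak throughput, so treat the results as upper bounds rather than measured performance.

```python
# Figures quoted in the text (vendor peak specs, not measured benchmarks).
PEAK_FP4_TFLOPS = 2070
MAX_POWER_W = 130
PRICE_USD = 3_500

# Assumption: peak compute is available at the maximum power setting.
tflops_per_watt = PEAK_FP4_TFLOPS / MAX_POWER_W

# Hardware cost per unit of peak compute.
usd_per_tflops = PRICE_USD / PEAK_FP4_TFLOPS

print(f"{tflops_per_watt:.1f} FP4 TFLOPS per watt at {MAX_POWER_W} W")
print(f"${usd_per_tflops:.2f} per peak FP4 TFLOPS")
```

With these inputs, the snippet prints 15.9 FP4 TFLOPS per watt and $1.69 per peak FP4 TFLOPS.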

Boston Dynamics, Humanoid, and RLWRLD have all integrated Jetson Thor into their existing humanoids to enhance their navigation and manipulation capabilities.

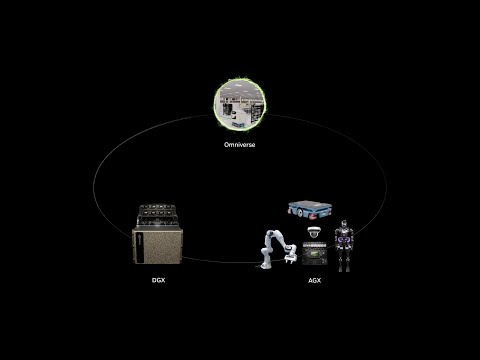

NVIDIA Omniverse

Omniverse is a collection of libraries and microservices for developing physical AI applications such as industrial digital twins and robotics simulation.

Virtual simulations, which generate “synthetic data”, are a great way to train a robotic AI rapidly across many situations without having to recreate those situations physically.
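One widely used way to get this variety out of a simulator is domain randomization: each training scene is sampled with different physical and visual parameters so the AI does not overfit to a single idealized world. The sketch below is a generic illustration of that technique in plain Python, not Omniverse’s API; the parameter names and ranges are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneConfig:
    """Hypothetical parameters a simulator might vary between training episodes."""
    light_intensity: float   # relative brightness, 1.0 = nominal
    floor_friction: float    # friction coefficient of the floor
    box_mass_kg: float       # mass of the object the robot must move
    box_x_m: float           # object position on the floor, in metres
    box_y_m: float
    camera_noise_std: float  # standard deviation of simulated sensor noise


def sample_scene(rng: random.Random) -> SceneConfig:
    # Uniform ranges are illustrative assumptions, not values from any real tool.
    return SceneConfig(
        light_intensity=rng.uniform(0.3, 1.5),
        floor_friction=rng.uniform(0.2, 1.0),
        box_mass_kg=rng.uniform(0.5, 20.0),
        box_x_m=rng.uniform(-2.0, 2.0),
        box_y_m=rng.uniform(-2.0, 2.0),
        camera_noise_std=rng.uniform(0.0, 0.05),
    )


def generate_scenes(n: int, seed: int = 42) -> list[SceneConfig]:
    """Produce n randomized scene configurations, reproducibly from a seed."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for scene in generate_scenes(3):
        print(scene)
```

Seeding the generator keeps a training run reproducible while still exposing the model to thousands of distinct variations.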

To build Omniverse, NVIDIA drew on its deep store of physics models, simulation tools, and data libraries already used in other applications such as physics research and video games.

This kind of tool is also very useful in logistics and manufacturing: custom digital twins of real facilities let companies test robotic AI deployments virtually first, reducing the risk of disruption when real robots are deployed.

Several industrial companies, such as Schneider Electric and Siemens, are already using it as well.

NVIDIA OSMO

OSMO is an “orchestrator” software, purpose-built for physical AI.

It allows users to coordinate and combine multiple AI tools, including Isaac and Cosmos, at every stage of physical AI development: data generation, training, simulation, evaluation, and hardware-in-the-loop testing.
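To make the idea of orchestration concrete, the toy Python pipeline below chains the five stages listed above and only runs hardware-in-the-loop testing if the simulated results pass an evaluation gate. Every function, threshold, and number in it is hypothetical; it illustrates the concept of an orchestrator, not OSMO’s actual interface or configuration format.

```python
from typing import Callable

Context = dict  # shared state passed from stage to stage


# Hypothetical stand-ins for the real workload at each stage.
def generate_data(ctx: Context) -> Context:
    ctx["episodes"] = ctx["num_scenes"] * 10  # assume 10 synthetic episodes per scene
    return ctx


def train(ctx: Context) -> Context:
    ctx["policy"] = f"policy_v1 ({ctx['episodes']} episodes)"
    return ctx


def simulate(ctx: Context) -> Context:
    # Toy assumption: more training data -> higher simulated success rate, capped at 100%.
    ctx["sim_success_rate"] = min(1.0, ctx["episodes"] / 1000)
    return ctx


def evaluate(ctx: Context) -> Context:
    ctx["passed"] = ctx["sim_success_rate"] >= ctx["threshold"]
    return ctx


def hardware_in_the_loop(ctx: Context) -> Context:
    ctx["hil_tested"] = True  # in practice: run the policy on real robot hardware
    return ctx


PIPELINE: list[tuple[str, Callable[[Context], Context]]] = [
    ("data generation", generate_data),
    ("training", train),
    ("simulation", simulate),
    ("evaluation", evaluate),
    ("hardware-in-the-loop testing", hardware_in_the_loop),
]


def orchestrate(ctx: Context) -> Context:
    """Run stages in order; skip hardware testing if evaluation did not pass."""
    log = []
    for name, stage in PIPELINE:
        if stage is hardware_in_the_loop and not ctx.get("passed", False):
            log.append(f"skipped {name}")
            break
        ctx = stage(ctx)
        log.append(name)
    ctx["log"] = log
    return ctx


if __name__ == "__main__":
    print(orchestrate({"num_scenes": 120, "threshold": 0.9})["log"])
    print(orchestrate({"num_scenes": 50, "threshold": 0.9})["log"])
```

The gate before the last stage reflects the general motivation for orchestration: expensive steps, such as tying up real robot hardware, only run once cheaper simulated checks have passed.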

NVIDIA DGX Platform

DGX is NVIDIA’s computing platform for training AI models, including physical AI models, and can be deployed at data-center scale as a DGX “SuperPOD”.

It can scale to tens of thousands of NVIDIA GPUs, including Rubin and Blackwell chips, creating a ready-to-run, turnkey AI supercomputer.

NVIDIA – Hugging Face

Hugging Face is an AI platform best known for its Transformers library for natural language processing. Often nicknamed the “GitHub for machine learning”, it hosts millions of pre-trained AI models, datasets, and libraries.

NVIDIA has integrated its open-source Isaac and GR00T technologies into Hugging Face’s LeRobot, a leading open-source robotics framework. Given Hugging Face’s community of 13 million AI builders, this should boost the adoption of NVIDIA systems as a standard in physical AI.

Conclusion

Robotics is suddenly making great progress thanks to two different forces converging at the same time.

The first is the maturity of robotic component technology and the mass production of robotic arms, gyroscopes, electric motors, and other parts used in robots as well as in drones and other electronics, which has quickly driven down the cost of high-quality parts.

The second force is the explosive improvement in AI technology.

What reached public awareness a few years ago with LLMs is now expanding into new fields, and the physical world is next, as physical AI is deployed into vehicles, custom-built robots, and humanoid bodies.

Physical AI may turn out to be even more important for the tech industry than LLMs, as it opens up a whole new range of economic sectors. This should help it capture more value and boost productivity, just as geopolitics drive a massive redrawing of supply chains and the reindustrialization of many countries.

Best Physical AI and Humanoid Robot Stocks for 2026

Boston Dynamics / Hyundai (HYMLF)

Hyundai is best known, with good reason, for its automaking business, as it is the world’s third-largest car company by number of vehicles sold. But it is also a massive industrial group, made up of three main divisions:

- The car-making activity, including electric cars.

- Robot maker Boston Dynamics, acquired in 2021, not to be confused with Hyundai Robotics, an industrial robot producer now part of the independent company HD Hyundai / Hyundai Heavy Industries (which still collaborates closely with Hyundai Motor).

- Hyundai Rotem, active in railway and military equipment as well as hydrogen energy.

Boston Dynamics, alongside Caterpillar, Franka Robotics, Humanoid, LG Electronics, and NEURA Robotics, is using the NVIDIA robotics stack to debut new AI-driven robots.

Boston Dynamics is best known for its ATLAS humanoid robot and for pioneering the robot-dog design.

It is also targeting the B2B physical AI market with Stretch, a warehouse robot that handles packages and loads weighing up to 50 pounds.

As an early leader in robotics and a partner of NVIDIA, Boston Dynamics is a good candidate for grabbing a significant portion of the physical AI market.

A spin-off IPO of Boston Dynamics from Hyundai is a distinct possibility, but there are no clear plans yet, so it is unlikely before 2027 at the earliest.

“Regarding a timeline or plans for Boston Dynamics’ IPO, nothing has been confirmed yet, so there is not much to comment right now, but we will communicate (with stakeholders) once we have an IPO timeline or plans.

Similar to how we carried out (Hyundai Motor India’s IPO), we can say that we are open-minded about Boston Dynamics. However, we have not reviewed (Boston Dynamics’ IPO) at the moment, and we do not have plans to review (the IPO option) in the short term.”

– Lee Seung-jo, Chief Financial Officer and Chief Strategic Officer of Hyundai Motor Co

Hyundai has started using Boston Dynamics humanoid robots in its automotive factories, and a commercial version of ATLAS has been revealed.

NVIDIA

From its origin as a GPU hardware maker for video gaming and other graphic rendering tasks, NVIDIA has evolved into a massive AI hardware company, giving its stock the world’s largest market capitalization.

NVIDIA realized AI’s potential early, long before anyone outside specialized research circles cared about neural networks.

This was, at the time, a risky move into an unproven, barely existing sector, or as Jensen Huang put it:

“We’re investing in zero-billion dollar markets.”

In 2016 and 2017, NVIDIA released the Pascal and Volta architectures, respectively. Pascal was billed as the first GPU-based AI accelerator, while Volta introduced Tensor Cores, which accelerated deep learning tasks by up to 12 times.

This pace of progress has continued ever since.

Investors have been somewhat worried that NVIDIA could soon run out of new markets to justify its high valuation multiples. The CES 2026 physical AI announcements suggest this is not yet the case.

The physical deployment of AI in robots, self-driving cars, and other autonomous systems will provide NVIDIA with many new markets to sell its hardware to.

Its complete ecosystem, with open models and a partnership with Hugging Face, almost guarantees that all but the largest tech companies will rely on NVIDIA technology for their robots’ brains, since reinventing the wheel would be too costly and would leave a company too far behind its competitors.